We are excited to announce that the Megh Video Analytics Solution (Megh VAS) Beta 1 Release is available! The Megh VAS Beta 1 Release extends the earlier Megh VAS alpha releases.

The alpha releases showcased inline processing of various streams, including filtering and transformations of data. For example, the Megh VAS Alpha Release for Intel performed video decode and image classification inline across two Intel Arria 10 FPGA Programmable Acceleration Cards (A10 PACs) connected via a breakout cable. The packaged demo showed how such a solution could be useful for fraud prevention in a retail supply chain.

What’s new for this release?

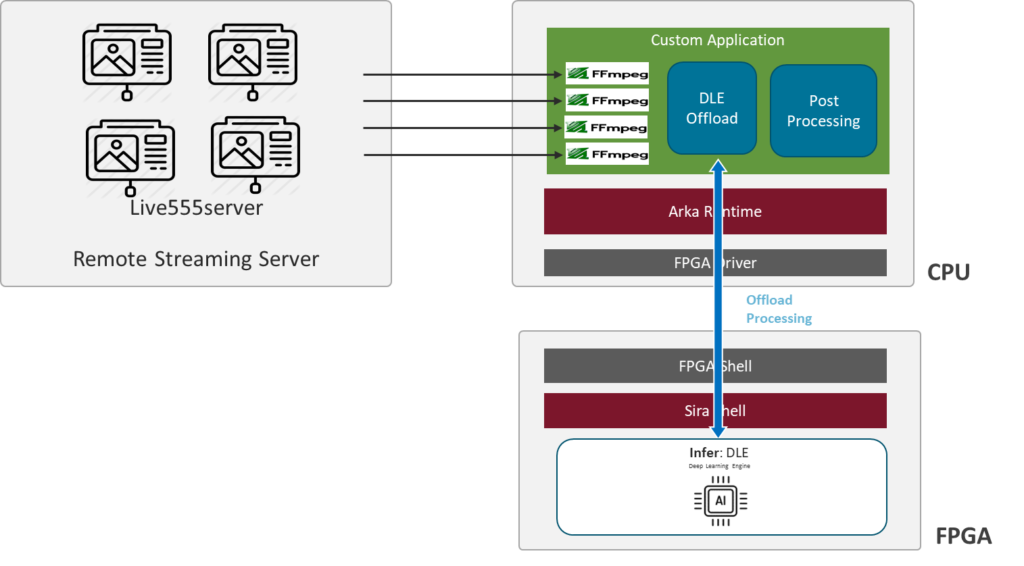

The Megh VAS Beta 1 Release showcases offload processing to accelerate compute-intensive deep learning functions. It offloads object detection and classification for multiple channels to a single FPGA that has been programmed to implement a MobileNet SSD (Single Shot Detector) detection network. The packaged demo performs simple post-processing (on the CPU) to demonstrate object tracking within each frame. More elaborate post-processing could be implemented to track objects across frames, which would be of interest for inventory intelligence and smart surveillance use cases.

About the demo

The Beta 1 demo is easy to install and configure, requiring only one server with a single Intel or Xilinx FPGA card. An RTSP server is used to simulate video streams incoming from live cameras. The test video was engineered to simulate four camera feeds by splitting a 30 fps camera video downloaded from YouTube. The demo system runs the RTSP server and both the CPU-only and CPU+FPGA modes simultaneously.

In CPU-only mode, each video stream is decoded into RGB frames on the CPU using FFmpeg, followed by MobileNet SSD object detection, followed by post-processing to implement tracking within a frame. In CPU+FPGA mode, the flow is the same, except that the MobileNet SSD object detection portion of the pipeline is offloaded to the FPGA, as shown in the above figure.
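To make the difference between the two modes concrete, here is a minimal Python sketch of a pipeline in which only the detection stage is swapped. It is not the Megh VAS API: `Detection`, `detect_on_cpu`, `detect_on_fpga`, `post_process`, and `run_pipeline` are hypothetical names, both detectors are placeholders that return a fixed result, and the score filter merely stands in for the demo's real post-processing.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List, Tuple

# Hypothetical types for illustration only; not part of Megh VAS.
Frame = List[List[Tuple[int, int, int]]]  # rows of RGB pixels


@dataclass
class Detection:
    label: str
    box: Tuple[int, int, int, int]  # x, y, width, height
    score: float


# A detector is any callable that maps one decoded frame to detections.
Detector = Callable[[Frame], List[Detection]]


def detect_on_cpu(frame: Frame) -> List[Detection]:
    # Placeholder for running MobileNet SSD inference on the CPU.
    return [Detection("object", (0, 0, 1, 1), 0.9)]


def detect_on_fpga(frame: Frame) -> List[Detection]:
    # Placeholder for offloading the same inference to the FPGA.
    return [Detection("object", (0, 0, 1, 1), 0.9)]


def post_process(detections: List[Detection], min_score: float = 0.5) -> List[Detection]:
    # Stand-in for the CPU post-processing step: keep confident detections.
    return [d for d in detections if d.score >= min_score]


def run_pipeline(frames: Iterable[Frame], detector: Detector) -> List[List[Detection]]:
    # Decode is assumed to have happened already; only the detector differs
    # between the CPU-only and CPU+FPGA modes.
    return [post_process(detector(frame)) for frame in frames]


frames = [[[(0, 0, 0)]], [[(255, 255, 255)]]]  # two tiny 1x1 RGB frames
cpu_results = run_pipeline(frames, detect_on_cpu)
fpga_results = run_pipeline(frames, detect_on_fpga)
```

Because the decode and post-processing stages never see which detector was used, the same pipeline code serves both modes; that is the property the demo relies on when it runs them side by side.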

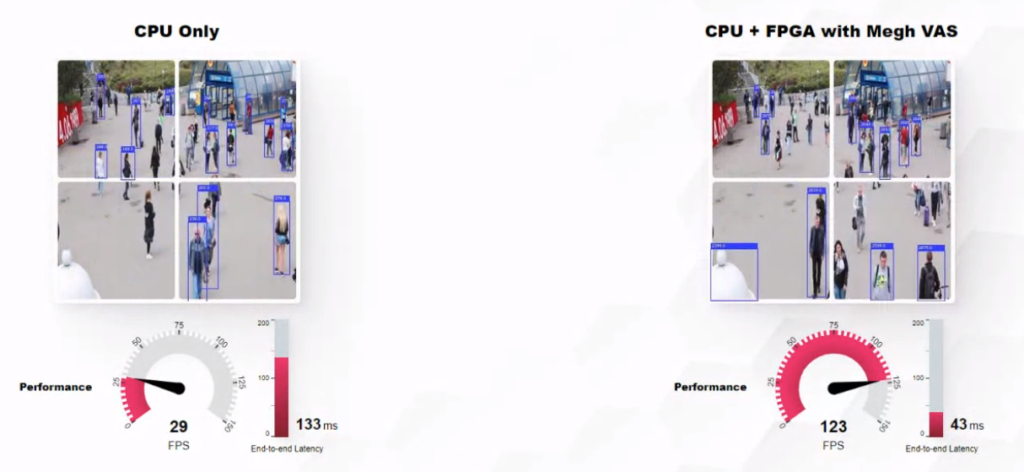

The demo output shows object detection and tracking over four channels of video, with each channel displayed in a cell of a 2×2 grid. The display output from both modes is shown side-by-side in a web GUI, as shown below. The demo shows that the CPU+FPGA solution delivers more than 4x the performance at less than 1/3 of the end-to-end latency of the CPU-only solution.

What’s coming next?

Future VAS releases will focus on expanding the number and types of DLE topologies that are implemented and optimized in the Megh libraries. In parallel, the Megh Software Development Kit will be released, including multiple language bindings to enable custom model generation and deployment. Future VAS demos will showcase how the Megh solution provides accelerator-as-a-service functionality for both inline and offload processing models, and scales across multiple FPGAs.

Megh solutions will also be expanding beyond VAS into other areas, such as text and speech analytics.